    !pip install openai -qqq

Giving an LLM the capability to call some external function based on the user’s input and receive the results back is a very powerful pattern and a key element behind the rapid rise of agentic workflows.

This pattern powers many of the features we see on ChatGPT today, such as web search, code execution, image generation, or personalized memory based on conversation history.

LLM providers expose this as tool use or function calling. We provide all the function signatures and parameters as JSON Schema and can later call the implementation in any programming language.

For example, we can write a JSON schema to provide a simple add function to OpenAI as shown below.

    def add(a: int, b: int) -> int:
        """Adds two integers together"""
        return a + b

First, we convert the function into a JSON Schema that gives the name of the function, a description of what it does, and the name and type of every parameter it accepts.

    tools = [
        {
            "type": "function",
            "function": {
                "name": "add",
                "description": "Adds two integers together",
                "strict": True,
                "parameters": {
                    "type": "object",
                    "required": ["a", "b"],
                    "properties": {
                        "a": {"type": "integer", "description": "The first integer to add"},
                        "b": {
                            "type": "integer",
                            "description": "The second integer to add",
                        },
                    },
                    "additionalProperties": False,
                },
            },
        }
    ]

Then, we can provide our schema as a list of tools and send a user query.

    from openai import OpenAI

    client = OpenAI()

    messages = [{"role": "user", "content": "Add 2 and 3"}]

    completion = client.chat.completions.create(
        model="gpt-4o-mini",
        messages=messages,
        tools=tools,
    )

The model then decides that it wants to call the add function with the parameters a=2 and b=3.

    tool_call = completion.choices[0].message.tool_calls[0]
    tool_call.function

    Function(arguments='{"a":2,"b":3}', name='add')

    tool_call.function.name

    'add'

We can fetch the arguments to be passed to the function as shown below.

    import json

    args = json.loads(tool_call.function.arguments)
    args

    {'a': 2, 'b': 3}

Then we call our function with those arguments and get a result.

    result = add(**args)
    print(result)

    5

The result is sent back to the LLM as a separate message in the context, and the model then generates a natural language reply on the next turn.

    messages.append(completion.choices[0].message)
    messages.append(
        {
            "role": "tool",
            "tool_call_id": tool_call.id,
            "content": str(result),
        }
    )

    completion_after_tool_call = client.chat.completions.create(
        model="gpt-4o-mini",
        messages=messages,
        tools=tools,
    )
    completion_after_tool_call.choices[0].message.content

    'The sum of 2 and 3 is 5.'
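The walkthrough above hard-codes the call to add. With more than one tool, the returned name has to be routed to the right Python function. The following is a minimal sketch of that routing, not part of the OpenAI SDK: `TOOL_REGISTRY`, `execute_tool_call`, and the `multiply` function are hypothetical names introduced here, and a `SimpleNamespace` stands in for the real tool call object so that no API request is made.

```python
import json
from types import SimpleNamespace


def add(a: int, b: int) -> int:
    """Adds two integers together"""
    return a + b


def multiply(a: int, b: int) -> int:
    """Multiplies two integers together"""
    return a * b


# Hypothetical registry: maps the tool name returned by the model to a callable
TOOL_REGISTRY = {"add": add, "multiply": multiply}


def execute_tool_call(tool_call) -> dict:
    """Run the requested function and build the `tool` message for the next turn."""
    name = tool_call.function.name
    if name not in TOOL_REGISTRY:
        raise ValueError(f"Model requested an unknown tool: {name}")
    # The arguments arrive as a JSON string, exactly as in the walkthrough
    args = json.loads(tool_call.function.arguments)
    result = TOOL_REGISTRY[name](**args)
    return {"role": "tool", "tool_call_id": tool_call.id, "content": str(result)}


# Stand-in for completion.choices[0].message.tool_calls[0] (assumed shape)
fake_tool_call = SimpleNamespace(
    id="call_123",
    function=SimpleNamespace(name="add", arguments='{"a": 2, "b": 3}'),
)

tool_message = execute_tool_call(fake_tool_call)
print(tool_message)
```

Rejecting unknown names explicitly keeps a hallucinated tool name from surfacing as an obscure `KeyError`.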

Now, the question becomes: how can we automatically convert Python functions into JSON Schemas?

In this post, I will go over the runtime introspection features that Python provides to extract pretty much everything about a function definition. Then we will use that knowledge to build automatic function-to-JSON-Schema converters.

Object Introspection

Let’s understand the various introspection features step by step.

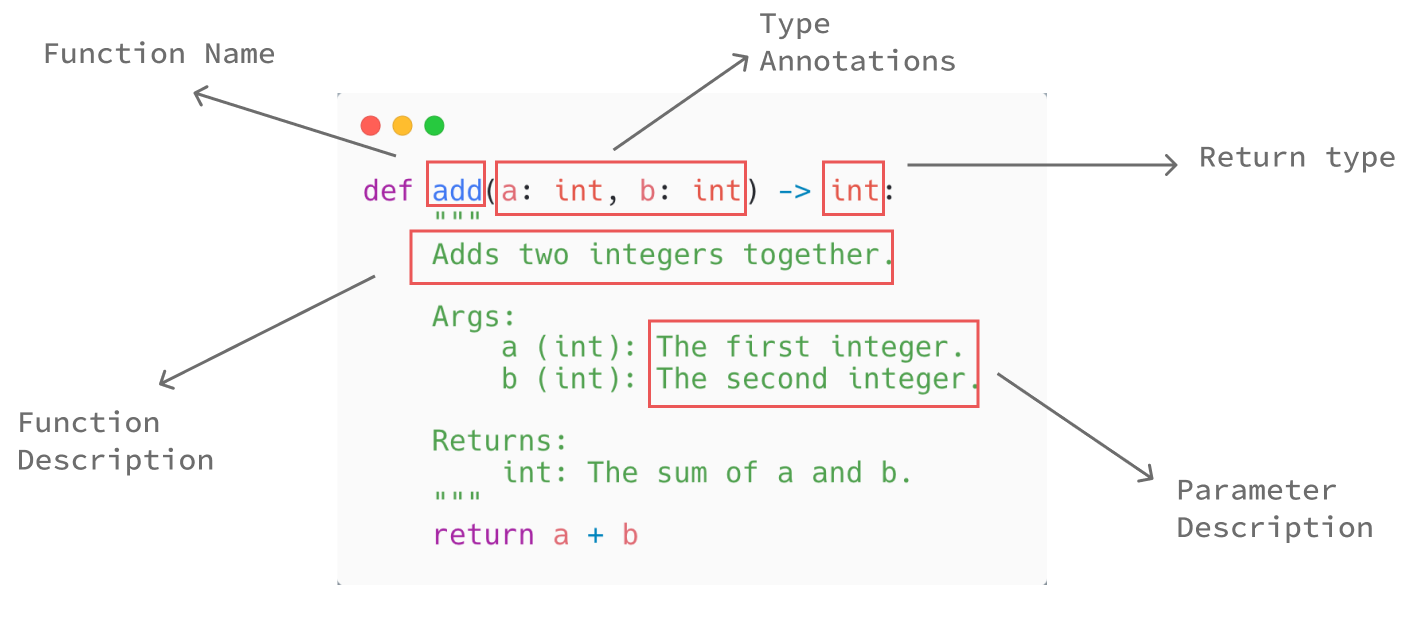

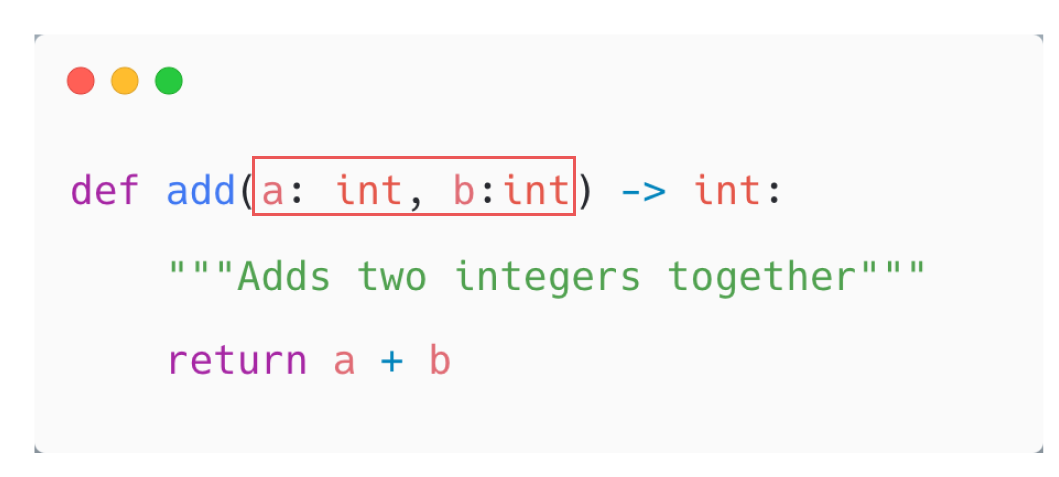

Extracting the parameters and type-annotations

To get the parameters of the function, we can use the signature function of the inspect module.

    def add(a: int, b: int) -> int:
        """Adds two integers together"""
        return a + b

This will return the entire signature for both the input parameters and the return type.

    import inspect

    signature = inspect.signature(add)
    signature

    <Signature (a: int, b: int) -> int>

We can get a dictionary of the parameters of the function from the signature.

    signature.parameters

    mappingproxy({'a': <Parameter "a: int">, 'b': <Parameter "b: int">})

We can access each parameter from the dictionary. It will return an object that has many useful properties.

    a = signature.parameters["a"]
    a

    <Parameter "a: int">

We can now easily access the name of the parameter, its default value, and its type annotation.

    print("Name of parameter: ", a.name)
    print("Default value: ", a.default)
    print("Type annotation: ", a.annotation)

    Name of parameter:  a
    Default value:  <class 'inspect._empty'>
    Type annotation:  <class 'int'>

This means that if a parameter has a default value of inspect._empty (also exposed publicly as inspect.Parameter.empty), it’s a required parameter.

    a.default == inspect._empty

    True
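To make the required-versus-optional distinction concrete, here is a small sketch that splits the parameters of a function with default values. The `greet` function is a hypothetical example, and the check uses `inspect.Parameter.empty`, the public name for the same sentinel object as `inspect._empty`.

```python
import inspect


def greet(name: str, greeting: str = "Hello", punctuation: str = "!") -> str:
    """Builds a greeting (hypothetical example function)."""
    return f"{greeting}, {name}{punctuation}"


params = inspect.signature(greet).parameters

# Parameters without a default carry the Parameter.empty sentinel
required = [n for n, p in params.items() if p.default is inspect.Parameter.empty]

# Everything else has a real default value that we can read back
defaults = {
    n: p.default for n, p in params.items() if p.default is not inspect.Parameter.empty
}

print(required)
print(defaults)
```

Comparing with `is` rather than `==` avoids surprises if a default value defines its own equality.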

The type annotation is of particular interest to us. It will return the type directly.

    a.annotation

    <class 'int'>

    a.annotation == int

    True

We can also get the type annotation for the return value, i.e. the output of the function, from the signature itself.

    signature.return_annotation

    <class 'int'>
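One caveat worth knowing: if annotations are written as strings (or the module uses `from __future__ import annotations`), `inspect.signature` returns those strings unchanged by default rather than the type objects. A hedged sketch of how `typing.get_type_hints` from the standard library resolves them:

```python
import inspect
import typing


def add(a: "int", b: "int") -> "int":
    """Adds two integers together"""
    return a + b


# The raw annotation is still the string 'int', not the int class
raw = inspect.signature(add).parameters["a"].annotation

# get_type_hints evaluates string annotations into real type objects
hints = typing.get_type_hints(add)

print(repr(raw))
print(hints)
```

The `return` key in the resulting dictionary holds the return annotation, so a converter would typically skip it when building the parameter schema.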

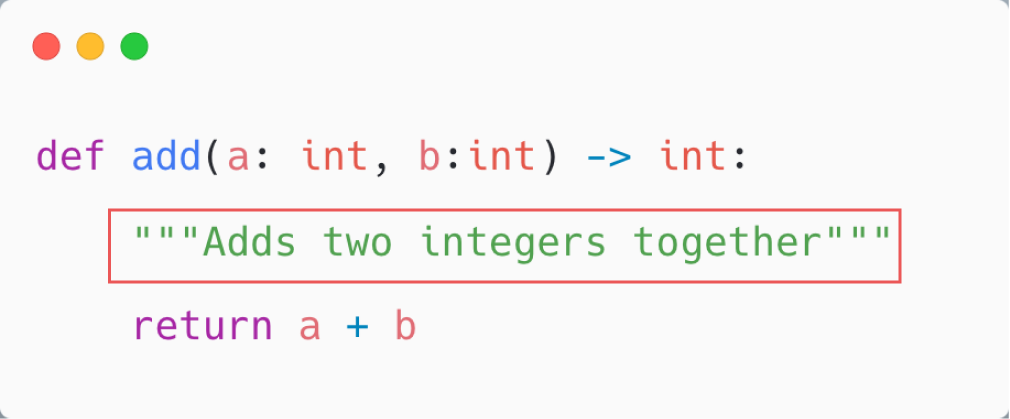

Extracting the docstring

To get the docstring, we can use the __doc__ attribute of the function.

    def add(a: int, b: int) -> int:
        """Adds two integers together"""
        return a + b

    add.__doc__

    'Adds two integers together'

An alternative approach is to use the inspect module itself.

    import inspect

    inspect.getdoc(add)

    'Adds two integers together'
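The two approaches give the same result for a one-line docstring, but they differ for multi-line ones: `__doc__` keeps the leading and trailing newlines (and, depending on the Python version, the source indentation), while `inspect.getdoc` cleans them up. A short sketch illustrating the difference:

```python
import inspect


def add(a: int, b: int) -> int:
    """
    Adds two integers together.

    Returns the sum of a and b.
    """
    return a + b


raw_doc = add.__doc__            # starts with a newline
clean_doc = inspect.getdoc(add)  # blank edges and indentation removed

print(repr(raw_doc))
print(repr(clean_doc))
```

For a tool description sent to an LLM, the cleaned form is usually the better choice since it carries no layout noise.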

Extracting the function name

This is relatively simple as Python already provides a __name__ attribute on each function.

    def add(a: int, b: int) -> int:
        """Adds two integers together"""
        return a + b

    add.__name__

    'add'
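A practical caveat: when a function has been wrapped by a decorator, `__name__`, `__doc__`, and the signature describe the wrapper unless the decorator uses `functools.wraps`. The sketch below contrasts the two cases. The decorator names `plain_decorator` and `wrapping_decorator` are hypothetical.

```python
import functools
import inspect


def plain_decorator(func):
    """Decorator WITHOUT functools.wraps: the metadata of func is hidden."""
    def wrapper(*args, **kwargs):
        return func(*args, **kwargs)
    return wrapper


def wrapping_decorator(func):
    """Decorator WITH functools.wraps: name, docstring and signature survive."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        return func(*args, **kwargs)
    return wrapper


@plain_decorator
def add_plain(a: int, b: int) -> int:
    """Adds two integers together"""
    return a + b


@wrapping_decorator
def add(a: int, b: int) -> int:
    """Adds two integers together"""
    return a + b


print(add_plain.__name__, add_plain.__doc__, inspect.signature(add_plain))
print(add.__name__, add.__doc__, inspect.signature(add))
```

`inspect.signature` follows the `__wrapped__` attribute that `functools.wraps` sets, which is why the second function still reports its original parameters.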

Extracting the parameter descriptions from the docstring

Since the format of docstrings can vary a lot, we can make use of a third-party library called docstring_parser.

    !pip install docstring_parser -qqq

    def add(a: int, b: int) -> int:
        """
        Adds two integers together.

        Args:
            a (int): The first integer.
            b (int): The second integer.

        Returns:
            int: The sum of a and b.
        """
        return a + b

    from docstring_parser import parse

    doc = parse(add.__doc__)
    {param.arg_name: param.description for param in doc.params}

    {'a': 'The first integer.', 'b': 'The second integer.'}
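To illustrate what such a parser does, here is a dependency-free sketch that handles only single-line entries in a Google-style `Args:` section. It is a simplification for illustration, not how docstring_parser is implemented, and the helper name `google_style_param_descriptions` is hypothetical.

```python
import inspect
import re


def add(a: int, b: int) -> int:
    """
    Adds two integers together.

    Args:
        a (int): The first integer.
        b (int): The second integer.

    Returns:
        int: The sum of a and b.
    """
    return a + b


def google_style_param_descriptions(func) -> dict:
    """Hypothetical helper: read `name (type): description` lines under `Args:`."""
    doc = inspect.getdoc(func) or ""
    descriptions = {}
    in_args = False
    for line in doc.splitlines():
        stripped = line.strip()
        if stripped == "Args:":
            in_args = True
            continue
        if in_args:
            # A non-empty, non-indented line (e.g. "Returns:") ends the section
            if stripped and not line.startswith(" "):
                break
            match = re.match(r"(\w+)\s*(?:\([^)]*\))?\s*:\s*(.+)", stripped)
            if match:
                descriptions[match.group(1)] = match.group(2)
    return descriptions


param_docs = google_style_param_descriptions(add)
print(param_docs)
```

Multi-line descriptions and the NumPy or reST docstring styles are not covered here, which is the main reason to prefer a dedicated library in practice.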

Functions to JSON Schema

With the above background knowledge, we have everything needed to convert the function definition to JSON Schema.

Let’s see how this is applied in various popular agent libraries.

Approach 1: Pure Python

This is the approach implemented in the OpenAI Swarm library. Here, we use all the introspection features discussed above to write the conversion function from scratch.

    !pip install git+https://github.com/openai/swarm.git -qqq

    def add(a: int, b: int) -> int:
        """Adds two integers together"""
        return a + b

Swarm has a utility function called function_to_json that converts a Python function into a JSON schema.

    from swarm.util import function_to_json

    function_to_json(add)

    {
        'type': 'function',
        'function': {
            'name': 'add',
            'description': 'Adds two integers together',
            'parameters': {
                'type': 'object',
                'properties': {'a': {'type': 'integer'}, 'b': {'type': 'integer'}},
                'required': ['a', 'b']
            }
        }
    }

As seen above, we first need some mapping to convert the parameter types from Python to the equivalent JSON schema data type.

| Python type | JSON Schema type |
|---|---|
| str | string |
| int | integer |
| float | number |
| bool | boolean |
| list | array |
| dict | object |
| None | null |

Based on this, the implementation is quite simple and reuses all the concepts we discussed before.

We take the function signature and extract the type of each parameter, along with the function name and docstring. Using these, we construct the JSON Schema at the end.

    # Source: https://github.com/openai/swarm/blob/9db581cecaacea0d46a933d6453c312b034dbf47/swarm/util.py#L31
    import inspect

    def function_to_json(func) -> dict:
        # A mapping of types from python to JSON
        type_map = {
            str: "string",
            int: "integer",
            float: "number",
            bool: "boolean",
            list: "array",
            dict: "object",
            type(None): "null",
        }

        try:
            signature = inspect.signature(func)  # (1)
        except ValueError as e:
            raise ValueError(
                f"Failed to get signature for function {func.__name__}: {str(e)}"
            )

        parameters = {}
        for param in signature.parameters.values():
            try:
                param_type = type_map.get(param.annotation, "string")  # (2)
            except KeyError as e:
                raise KeyError(
                    f"Unknown type annotation {param.annotation} for parameter {param.name}: {str(e)}"
                )
            parameters[param.name] = {"type": param_type}

        required = [  # (3)
            param.name
            for param in signature.parameters.values()
            if param.default == inspect._empty
        ]

        return {
            "type": "function",
            "function": {
                "name": func.__name__,  # (4)
                "description": func.__doc__ or "",  # (5)
                "parameters": {
                    "type": "object",
                    "properties": parameters,
                    "required": required,
                },
            },
        }

1. Get the function signature.
2. For each parameter, convert the type annotation to a valid JSON type. Default to string if the user didn’t specify a type.
3. Find out which parameters are required.
4. Extract the function name.
5. Extract the docstring.

    function_to_json(add)

    {
        'type': 'function',
        'function': {
            'name': 'add',
            'description': 'Adds two integers together',
            'parameters': {
                'type': 'object',
                'properties': {'a': {'type': 'integer'}, 'b': {'type': 'integer'}},
                'required': ['a', 'b']
            }
        }
    }
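One limitation of a flat type lookup is that parameterized annotations such as `list[int]` or `Optional[int]` are not keys in the mapping, so they fall through to the `"string"` default. The following sketch shows one possible way to handle them with `typing.get_origin` and `typing.get_args`. It is an extension written for this post, not part of Swarm, and `annotation_to_schema` is a hypothetical helper name.

```python
import typing
from typing import Optional, Union

TYPE_MAP = {
    str: "string",
    int: "integer",
    float: "number",
    bool: "boolean",
    list: "array",
    dict: "object",
    type(None): "null",
}


def annotation_to_schema(annotation) -> dict:
    """Hypothetical helper: map a type annotation to a JSON Schema fragment."""
    origin = typing.get_origin(annotation)
    args = typing.get_args(annotation)

    if origin is list:
        # list[int] -> array whose items are integers
        items = annotation_to_schema(args[0]) if args else {}
        return {"type": "array", "items": items}
    if origin is Union:
        # Optional[int] is Union[int, None]
        return {"anyOf": [annotation_to_schema(arg) for arg in args]}
    if origin in TYPE_MAP:
        # Other parameterized containers, e.g. dict[str, int] -> object
        return {"type": TYPE_MAP[origin]}
    # Same fallback as the flat lookup: unknown types become "string"
    return {"type": TYPE_MAP.get(annotation, "string")}


print(TYPE_MAP.get(list[int], "string"))  # what the flat lookup yields
print(annotation_to_schema(list[int]))
print(annotation_to_schema(Optional[int]))
```

The sketch assumes Python 3.9+ for the `list[int]` syntax and only covers `typing.Optional`/`typing.Union`. Unions written as `int | None` may report a different origin on some Python versions and would need an extra check.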

Approach 2: Pydantic

2a. Dynamic Models

I first came across this approach in Jeremy Howard’s talk, and the same pattern is implemented under the hood in popular libraries like LlamaIndex and LangChain.

Pydantic is a popular python library already used for data validation and serialization of structured data. As such, it can convert a Python class into a JSON schema directly.

For example, if we were to define a Pydantic model for our add function manually, it would look something like below.

    from pydantic import BaseModel

    class Add(BaseModel):
        a: int
        b: int

    Add.model_json_schema()

    {
        'properties': {'a': {'title': 'A', 'type': 'integer'}, 'b': {'title': 'B', 'type': 'integer'}},
        'required': ['a', 'b'],
        'title': 'Add',
        'type': 'object'
    }

However, we actually want to create the Pydantic data model dynamically. This is possible via the create_model function provided by Pydantic. It takes the name of the model as the first argument, followed by keyword arguments that define the fields of the model.

Here a=(int, ...) means that the field a is of type int and is required.

    from pydantic import create_model

    a = create_model("Add", a=(int, ...), b=(int, ...))
    a.model_json_schema()

    {
        'properties': {'a': {'title': 'A', 'type': 'integer'}, 'b': {'title': 'B', 'type': 'integer'}},
        'required': ['a', 'b'],
        'title': 'Add',
        'type': 'object'
    }

Thus, if we can somehow create a dictionary of our function parameters, then we can pass that using the **kwargs trick and then get the JSON schema directly.

    from pydantic import create_model

    a = create_model("Add", **{"a": (int, ...), "b": (int, ...)})
    a.model_json_schema()

    {
        'properties': {'a': {'title': 'A', 'type': 'integer'}, 'b': {'title': 'B', 'type': 'integer'}},
        'required': ['a', 'b'],
        'title': 'Add',
        'type': 'object'
    }

Below, we implement a function that uses this concept to convert the add function into JSON Schema directly.

We use inspect.signature as before to get all the function parameters and then prepare a Pydantic model directly from it.

    import inspect

    from pydantic import create_model

    def add(a: int, b: int) -> int:
        """Adds two integers together"""
        return a + b

    def schema(f):
        kws = {
            name: (
                # Get the type annotation
                parameter.annotation,
                # Check if parameter is required or optional
                ... if parameter.default == inspect._empty else parameter.default,
            )
            for name, parameter in inspect.signature(f).parameters.items()
        }
        # Pass the function name and parameters to get a pydantic model
        p = create_model(f"`{f.__name__}`", **kws)
        # Convert to JSON Schema
        schema = p.model_json_schema()
        return {
            "type": "function",
            "function": {
                "name": f.__name__,
                "description": f.__doc__,
                "parameters": schema,
            },
        }

    schema(add)

    {
        'type': 'function',
        'function': {
            'name': 'add',
            'description': 'Adds two integers together',
            'parameters': {
                'properties': {
                    'a': {'title': 'A', 'type': 'integer'},
                    'b': {'title': 'B', 'type': 'integer'}
                },
                'required': ['a', 'b'],
                'title': '`add`',
                'type': 'object'
            }
        }
    }

2b. Type Adapter

Pydantic introduced a new feature called TypeAdapter in version 2.0. It provides validation, serialization, and JSON Schema generation for arbitrary Python types, without having to define a BaseModel first.

We can use it to get JSON schema for the function parameters directly without requiring use of inspect.signature.

    from pydantic import TypeAdapter

    def add(a: int, b: int) -> int:
        """Adds two integers together"""
        return a + b

    def schema(f):
        schema = TypeAdapter(f).json_schema()
        return {
            "type": "function",
            "function": {
                "name": f.__name__,
                "description": f.__doc__,
                "parameters": schema,
            },
        }

    schema(add)

    {
        'type': 'function',
        'function': {
            'name': 'add',
            'description': 'Adds two integers together',
            'parameters': {
                'additionalProperties': False,
                'properties': {
                    'a': {'title': 'A', 'type': 'integer'},
                    'b': {'title': 'B', 'type': 'integer'}
                },
                'required': ['a', 'b'],
                'type': 'object'
            }
        }
    }

Approach 3: Decorators

Most agent libraries (e.g. smolagents) wrap conversion approaches like the ones above in decorators to make them easier to use.

For example, we can make a decorator called tool, which, when applied to a function, will add a json_schema method to that function.

    def tool(func):
        func.json_schema = lambda: function_to_json(func)
        return func

We can mark our functions with the decorator.

    @tool
    def add(a: int, b: int) -> int:
        """Adds two numbers"""
        return a + b

We can then use the json_schema method to get the schema directly and use it downstream in the LLM API.

    add.json_schema()

    {
        'type': 'function',
        'function': {
            'name': 'add',
            'description': 'Adds two numbers',
            'parameters': {
                'type': 'object',
                'properties': {'a': {'type': 'integer'}, 'b': {'type': 'integer'}},
                'required': ['a', 'b']
            }
        }
    }
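A natural extension of this decorator is to have it also record each function in a registry, so the full tools list can be built in one step. The sketch below is one way to do that under stated assumptions: `TOOLS` and `simple_schema` are hypothetical names, `simple_schema` is a condensed stand-in for function_to_json so the example runs on its own, and `shout` is a made-up second tool.

```python
import inspect

TYPE_MAP = {str: "string", int: "integer", float: "number", bool: "boolean"}

# Hypothetical module-level registry of every function marked with @tool
TOOLS = {}


def simple_schema(func) -> dict:
    """Condensed stand-in for function_to_json, to keep the sketch self-contained."""
    params = inspect.signature(func).parameters
    return {
        "type": "function",
        "function": {
            "name": func.__name__,
            "description": inspect.getdoc(func) or "",
            "parameters": {
                "type": "object",
                "properties": {
                    name: {"type": TYPE_MAP.get(p.annotation, "string")}
                    for name, p in params.items()
                },
                "required": [
                    name
                    for name, p in params.items()
                    if p.default is inspect.Parameter.empty
                ],
            },
        },
    }


def tool(func):
    """Attach a json_schema method and record the function in TOOLS."""
    func.json_schema = lambda: simple_schema(func)
    TOOLS[func.__name__] = func
    return func


@tool
def add(a: int, b: int) -> int:
    """Adds two numbers"""
    return a + b


@tool
def shout(text: str, times: int = 1) -> str:
    """Repeats the text in upper case"""
    return text.upper() * times


# The complete `tools` list for an API call, built from the registry
tools = [func.json_schema() for func in TOOLS.values()]
print([t["function"]["name"] for t in tools])
```

Because the decorator returns the original function unchanged, `add(2, 3)` still behaves exactly as before. The registry can also serve as the lookup table when executing the tool calls the model returns.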

Conclusion

In this post, we saw how Python’s runtime introspection enables automatic conversion of function definitions into JSON Schema.