Amit Chaudhary

May 9, 2020

I recently came across an interesting thread on Twitter discussing a hypothetical scenario where research papers are published on GitHub and subsequent papers are diffs over the original paper. Information overload has been a real problem in ML, with so many new papers coming out every month.

If you could represent a paper as a code diff, many papers could be compressed down to <50 lines :) The diff would also be more intuitive to read and eval standardized.

— Denny Britz (@dennybritz) April 25, 2020

Some ideas are so different that this wouldn’t apply, but I think it would work well for the majority. https://t.co/JoAcIK9Cm7

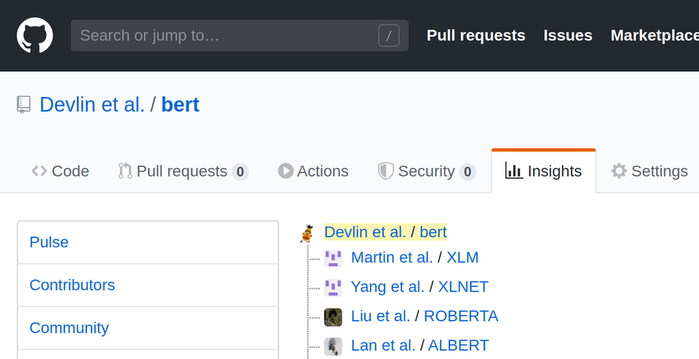

This post is a fun experiment showcasing what the commit history could look like for the BERT paper and some of its subsequent variants.

commit arXiv:1810.04805

Author: Devlin et al.

Date: Thu Oct 11 00:50:01 2018 +0000

-Transformer Decoder

+Masked Language Modeling

+Next Sentence Prediction

+WordPiece 30K
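To make "+Masked Language Modeling" concrete, here is a minimal Python sketch of the input corruption BERT describes: about 15% of positions are chosen for prediction, and of those, 80% become [MASK], 10% become a random token and 10% stay unchanged. The token ids, `mask_id` and `vocab_size` below are arbitrary example values; selecting each position independently with probability 0.15 (rather than exactly 15%), using -100 as the "no prediction" label, and not protecting special tokens like [CLS]/[SEP] are simplifying assumptions of this sketch.

```python
import random
from typing import List, Optional, Tuple

IGNORE = -100  # assumed label for positions the loss should skip


def mask_tokens(
    token_ids: List[int],
    mask_id: int,
    vocab_size: int,
    mask_prob: float = 0.15,
    rng: Optional[random.Random] = None,
) -> Tuple[List[int], List[int]]:
    """BERT-style MLM corruption (sketch): returns (inputs, labels)."""
    rng = rng or random.Random()
    inputs = list(token_ids)
    labels = [IGNORE] * len(token_ids)
    for i, tok in enumerate(token_ids):
        if rng.random() >= mask_prob:
            continue  # not selected: no prediction at this position
        labels[i] = tok  # the model must recover the original token here
        r = rng.random()
        if r < 0.8:
            inputs[i] = mask_id  # 80%: replace with [MASK]
        elif r < 0.9:
            inputs[i] = rng.randrange(vocab_size)  # 10%: random token
        # remaining 10%: keep the original token
    return inputs, labels


inputs, labels = mask_tokens([5, 17, 42, 8, 99, 23], mask_id=4, vocab_size=100, rng=random.Random(0))
```

Keeping 10% of the selected tokens unchanged (and swapping 10% for random ones) means the model cannot assume that only [MASK] positions matter, which reduces the mismatch with fine-tuning, where [MASK] never appears.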

commit arXiv:1901.07291

Author: Lample et al.

Date: Tue Jan 22 13:22:34 2019 +0000

+Translation Language Modeling (TLM)

+Causal Language Modeling (CLM)
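The TLM line can be sketched as follows: XLM concatenates a sentence with its translation, restarts the position ids for the second sentence, tags each token with its language, and then masks tokens in both halves so the model can use context from either language to fill a gap. This is a minimal sketch: the token and language ids are hypothetical, separator tokens are omitted, and replacing every selected token with `mask_id` is a simplification of the BERT-style 80/10/10 scheme.

```python
import random
from typing import List, Optional, Tuple


def build_tlm_example(
    src: List[int],
    tgt: List[int],
    src_lang: int,
    tgt_lang: int,
    mask_id: int,
    mask_prob: float = 0.15,
    rng: Optional[random.Random] = None,
) -> Tuple[List[int], List[int], List[int], List[int]]:
    """Return (inputs, positions, langs, labels) for one parallel pair (sketch)."""
    rng = rng or random.Random()
    tokens = src + tgt
    # Positions restart at 0 for the target sentence to ease alignment.
    positions = list(range(len(src))) + list(range(len(tgt)))
    # Language ids tell the model which half each token belongs to.
    langs = [src_lang] * len(src) + [tgt_lang] * len(tgt)
    inputs, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            inputs.append(mask_id)  # masked in either language
            labels.append(tok)
        else:
            inputs.append(tok)
            labels.append(-100)  # assumed "no prediction" label
    return inputs, positions, langs, labels
```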

commit arXiv:1901.08746

Author: Lee et al.

Date: Fri Jan 25 05:57:24 2019 +0000

+PubMed Abstracts data

+PubMed Central Full Texts data

commit arXiv:1901.11504

Author: Liu et al.

Date: Thu Jan 31 18:07:25 2019 +0000

+Multi-task Learning
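For "+Multi-task Learning", MT-DNN keeps BERT as a shared encoder and adds a task-specific output layer per task. In each epoch the mini-batches of all tasks are merged and shuffled, and each step updates the shared encoder plus the head of that batch's task. The sketch below shows only this scheduling step; the task names and batch contents are made up for illustration.

```python
import random
from typing import Dict, List, Tuple


def multitask_schedule(
    task_batches: Dict[str, List[list]], rng: random.Random
) -> List[Tuple[str, list]]:
    """Merge every task's mini-batches into one list and shuffle it (one epoch)."""
    schedule = [(task, batch) for task, batches in task_batches.items() for batch in batches]
    rng.shuffle(schedule)
    return schedule


batches = {
    "sentiment": [["b1"], ["b2"]],  # hypothetical task names and batches
    "nli": [["b3"]],
    "similarity": [["b4"], ["b5"], ["b6"]],
}
for task, batch in multitask_schedule(batches, random.Random(0)):
    # A real trainer would run the shared encoder, then the head for `task`.
    print(task, batch)
```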

commit arXiv:1903.10676

Author: Beltagy et al.

Date: Tue Mar 26 05:11:46 2019 +0000

-BERT WordPiece Vocabulary

-English Wikipedia

-BookCorpus

+1.14M Semantic Scholar Papers (Biomedical + Computer Science)

+ScispaCy segmentation

+SciVOCAB WordPiece Vocabulary

commit arXiv:1906.08237

Author: Yang et al.

Date: Wed Jun 19 17:35:48 2019 +0000

-Masked Language Modeling

-BERT Transformer

+Permutation Language Modeling

+Transformer-XL

+Two-stream self-attention

+SentencePiece Tokenizer
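Permutation Language Modeling keeps the input in its original order but samples a random factorization order; each token is predicted only from tokens that come earlier in that sampled order, which XLNet enforces with attention masks. The sketch below builds the query-stream mask (a position cannot see its own token) and the content-stream mask (a position may also attend to itself). It is a minimal illustration, not XLNet's implementation, which also predicts only the last tokens of each sampled order, among other details.

```python
import random
from typing import List, Tuple


def permutation_masks(
    seq_len: int, rng: random.Random
) -> Tuple[List[int], List[List[bool]], List[List[bool]]]:
    """Sample a factorization order and the matching attention masks (sketch).

    query[i][j] is True if position i may attend to position j when predicting
    token i; content[i][j] additionally allows i to attend to itself.
    """
    order = list(range(seq_len))
    rng.shuffle(order)  # e.g. [2, 0, 3, 1]: predict x2 first, then x0, ...
    rank = [0] * seq_len
    for r, pos in enumerate(order):
        rank[pos] = r  # where each position falls in the sampled order
    query = [[rank[j] < rank[i] for j in range(seq_len)] for i in range(seq_len)]
    content = [[rank[j] <= rank[i] for j in range(seq_len)] for i in range(seq_len)]
    return order, query, content
```

Because the order is resampled per sequence, every token is eventually predicted from context on both sides, without ever feeding a [MASK] token to the model.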

commit arXiv:1907.10529

Author: Joshi et al.

Date: Wed Jul 24 15:43:40 2019 +0000

-Random Token Masking

-Next Sentence Prediction

-Bi-sequence Training

+Contiguous Span Masking

+Span-Boundary Objective (SBO)

+Single-Sequence Training
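Contiguous span masking replaces BERT's independent token choices with whole spans: span lengths are drawn from a geometric distribution (p = 0.2, clipped at 10 in the paper), and spans are added until 15% of the sequence is masked. This sketch works on token positions rather than complete words, which is a simplification, and trims the last span so the budget is hit exactly. The SBO head, which predicts each masked token from the two tokens just outside the span plus a position embedding, is not shown.

```python
import random
from typing import List


def sample_span_length(rng: random.Random, p: float = 0.2, max_len: int = 10) -> int:
    """Draw from Geometric(p) on {1, 2, ...}, clipped at max_len."""
    length = 1
    while rng.random() >= p and length < max_len:
        length += 1
    return length


def span_mask_positions(
    seq_len: int, rng: random.Random, budget: float = 0.15, p: float = 0.2, max_len: int = 10
) -> List[int]:
    """Choose contiguous spans until `budget` of the positions are masked."""
    target = max(1, round(seq_len * budget))
    masked = set()
    while len(masked) < target:
        # Trim the span so we never exceed the masking budget.
        length = min(sample_span_length(rng, p, max_len), target - len(masked))
        start = rng.randrange(seq_len - length + 1)
        masked.update(range(start, start + length))  # overlapping spans are allowed
    return sorted(masked)
```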

commit arXiv:1907.11692

Author: Liu et al.

Date: Fri Jul 26 17:48:29 2019 +0000

-Next Sentence Prediction

-Static Masking of Tokens

+Dynamic Masking of Tokens

+Byte Pair Encoding (BPE) 50K

+Large batch size

+CC-NEWS (76GB) dataset

+OpenWebText (38GB) dataset

+Stories (31GB) dataset
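The static-versus-dynamic masking swap is easy to see in code. Original BERT masks each sequence once during preprocessing (RoBERTa's static baseline duplicates the data 10 times, so each sequence is seen with 10 different masks over 40 epochs), while dynamic masking draws a new pattern every time a sequence is fed to the model. A minimal sketch, with token selection simplified to an independent 15% chance per position:

```python
import random
from typing import FrozenSet, List


def choose_mask(seq_len: int, rng: random.Random, prob: float = 0.15) -> FrozenSet[int]:
    return frozenset(i for i in range(seq_len) if rng.random() < prob)


def static_masks(seq_len: int, epochs: int, seed: int = 0) -> List[FrozenSet[int]]:
    rng = random.Random(seed)
    pattern = choose_mask(seq_len, rng)  # fixed once, at preprocessing time
    return [pattern] * epochs


def dynamic_masks(seq_len: int, epochs: int, seed: int = 0) -> List[FrozenSet[int]]:
    rng = random.Random(seed)
    return [choose_mask(seq_len, rng) for _ in range(epochs)]  # fresh every epoch
```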

commit arXiv:1908.10084

Author: Reimers et al.

Date: Tue Aug 27 08:50:17 2019 +0000

+Siamese Network Structure

+Finetuning on SNLI and MNLI
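The siamese structure means both sentences go through the same encoder with shared weights. Token outputs are mean-pooled into fixed-size vectors u and v, which are compared with cosine similarity at inference; for the SNLI/MNLI classification objective, the concatenation (u, v, |u - v|) feeds a softmax classifier. In this sketch a tiny hypothetical embedding table (`TOY_EMBEDDINGS`) stands in for BERT's contextual token outputs.

```python
import math
from typing import Dict, List

Vector = List[float]

# Hypothetical stand-in for BERT's contextual token outputs.
TOY_EMBEDDINGS: Dict[str, Vector] = {
    "a": [1.0, 0.0], "cat": [0.0, 1.0], "dog": [0.2, 0.9], "sits": [0.5, 0.5],
}


def mean_pool(vectors: List[Vector]) -> Vector:
    dim = len(vectors[0])
    return [sum(v[d] for v in vectors) / len(vectors) for d in range(dim)]


def encode(tokens: List[str]) -> Vector:
    # The same function (same weights) encodes both sentences: the siamese part.
    return mean_pool([TOY_EMBEDDINGS[t] for t in tokens])


def cosine(u: Vector, v: Vector) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def classification_features(u: Vector, v: Vector) -> Vector:
    # (u, v, |u - v|) is the input to the softmax classifier for NLI.
    return u + v + [abs(a - b) for a, b in zip(u, v)]


u, v = encode(["a", "cat", "sits"]), encode(["a", "dog", "sits"])
similarity = cosine(u, v)
```

Since each sentence is encoded independently, embeddings for a large corpus can be computed once and compared cheaply, instead of running BERT on every sentence pair.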

commit arXiv:1909.11942

Author: Lan et al.

Date: Thu Sep 26 07:06:13 2019 +0000

-Next Sentence Prediction

+Sentence Order Prediction

+Cross-layer Parameter Sharing

+Factorized Embeddings

+SentencePiece Tokenizer
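Two of ALBERT's changes reduce to a little arithmetic and a data-construction rule. Factorized embeddings replace the V × H embedding matrix with a V × E matrix followed by an E × H projection; with V = 30,000, E = 128 and H = 4,096 (the hidden size of ALBERT-xxlarge), that is about 122.9M versus 4.4M parameters. Sentence Order Prediction takes two consecutive segments and asks whether they appear in their original order or swapped. The label convention (1 = original order) is an assumption of this sketch.

```python
import random
from typing import List, Optional, Tuple


def embedding_params(vocab: int, hidden: int, emb: Optional[int] = None) -> int:
    """Embedding parameters: V*H, or V*E + E*H when factorized with size E."""
    if emb is None:
        return vocab * hidden
    return vocab * emb + emb * hidden


def make_sop_example(
    seg_a: List[str], seg_b: List[str], rng: random.Random
) -> Tuple[List[str], List[str], int]:
    """seg_a and seg_b are consecutive segments from the same document."""
    if rng.random() < 0.5:
        return seg_a, seg_b, 1  # positive: original order
    return seg_b, seg_a, 0  # negative: same segments, swapped


print(embedding_params(30_000, 4_096))       # 122880000
print(embedding_params(30_000, 4_096, 128))  # 4364288
```

Unlike NSP's random-document negatives, both SOP segments come from the same document, so the task cannot be solved by topic cues alone.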

commit arXiv:1910.01108

Author: Sanh et al.

Date: Wed Oct 2 17:56:28 2019 +0000

-Next Sentence Prediction

-Token-Type Embeddings

-[CLS] pooling

+Knowledge Distillation

+Cosine Embedding Loss

+Dynamic Masking
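DistilBERT's training objective combines three terms: a distillation loss between the teacher's and student's temperature-softened output distributions, the usual MLM loss, and a cosine embedding loss that aligns the directions of the student and teacher hidden states. The sketch below computes each term for a single position with plain lists; the MLM loss is passed in as a precomputed number, and the temperature and `alpha_*` weights are placeholder values, not the paper's settings.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_ce(student: List[float], teacher: List[float], temperature: float = 2.0) -> float:
    """Cross-entropy of the student's softened distribution against the teacher's."""
    t = softmax(teacher, temperature)
    s = softmax(student, temperature)
    return -sum(ti * math.log(si) for ti, si in zip(t, s))


def cosine_embedding_loss(student_h: List[float], teacher_h: List[float]) -> float:
    """1 - cos(student, teacher): zero when the hidden states point the same way."""
    dot = sum(a * b for a, b in zip(student_h, teacher_h))
    norm = math.sqrt(sum(a * a for a in student_h)) * math.sqrt(sum(b * b for b in teacher_h))
    return 1.0 - dot / norm


def distilbert_loss(
    student_logits: List[float], teacher_logits: List[float], mlm_loss: float,
    student_h: List[float], teacher_h: List[float],
    alpha_ce: float = 1.0, alpha_mlm: float = 1.0, alpha_cos: float = 1.0,
    temperature: float = 2.0,
) -> float:
    return (alpha_ce * distillation_ce(student_logits, teacher_logits, temperature)
            + alpha_mlm * mlm_loss
            + alpha_cos * cosine_embedding_loss(student_h, teacher_h))
```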

commit arXiv:1911.03894

Author: Martin et al.

Date: Sun Nov 10 10:46:37 2019 +0000

-BERT

-English

+RoBERTa

+French OSCAR dataset (138GB)

+Whole-word Masking (WWM)

+SentencePiece Tokenizer
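With whole-word masking, the masking decision is made per word rather than per subword: if a word is selected, all of its SentencePiece pieces are masked together. The sketch assumes SentencePiece's convention of marking word-initial pieces with "▁" and uses string pieces with an assumed `<mask>` symbol; selecting each word independently with probability 0.15 and always substituting the mask token are simplifications.

```python
import random
from typing import List, Optional, Tuple


def group_words(pieces: List[str]) -> List[List[int]]:
    """Group piece indices into words; '▁' marks the start of a new word."""
    words: List[List[int]] = []
    for i, piece in enumerate(pieces):
        if piece.startswith("▁") or not words:
            words.append([i])
        else:
            words[-1].append(i)  # continuation piece of the current word
    return words


def whole_word_mask(
    pieces: List[str], mask_token: str = "<mask>", prob: float = 0.15,
    rng: Optional[random.Random] = None,
) -> Tuple[List[str], List[Optional[str]]]:
    rng = rng or random.Random()
    inputs = list(pieces)
    labels: List[Optional[str]] = [None] * len(pieces)
    for word in group_words(pieces):
        if rng.random() < prob:
            for i in word:  # mask every piece of the selected word
                labels[i] = pieces[i]
                inputs[i] = mask_token
    return inputs, labels


pieces = ["▁Le", "▁cam", "ember", "t", "▁est", "▁délicieux"]
masked, labels = whole_word_mask(pieces, rng=random.Random(0))
```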

commit arXiv:1912.05372

Author: Le et al.

Date: Wed Dec 11 14:59:32 2019 +0000

-BERT

-English

+RoBERTa

+fastBPE

+Stochastic Depth

+French dataset (71GB)

+FLUE (French Language Understanding Evaluation) benchmark
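Stochastic depth randomly skips whole residual layers during training and uses the full stack at inference. The sketch below applies it to a stack of toy residual branches acting on a float. Two choices here are assumptions rather than FlauBERT's exact recipe: kept branches are rescaled by 1/keep_prob (the "drop path" style) so training matches inference in expectation, and a single placeholder drop probability is used for every layer (the original stochastic depth paper decays the survival probability linearly with depth).

```python
import random
from typing import Callable, List, Optional


def residual_stack(
    x: float,
    branches: List[Callable[[float], float]],
    drop_prob: float = 0.1,
    training: bool = True,
    rng: Optional[random.Random] = None,
) -> float:
    """Apply x = x + f(x) for each branch f, dropping branches at random in training."""
    rng = rng or random.Random()
    keep_prob = 1.0 - drop_prob
    for f in branches:
        if not training:
            x = x + f(x)  # inference: every layer is used
        elif rng.random() < keep_prob:
            x = x + f(x) / keep_prob  # kept: rescale so the expectation matches inference
        # otherwise the branch is dropped and only the identity shortcut remains
    return x


layers = [lambda h: h] * 3  # toy residual branches
print(residual_stack(1.0, layers, training=False))  # 8.0
```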